I’ve been seeing a surprising number of people starting to treat LLMs as their main search tool, not realizing how often these models get things wrong, and how serious the fallout can be.

4

They Make Up Facts Confidently and Hallucinate

Here’s the thing about AI chatbots: they’re designed to sound smart, not to be accurate. When you ask something, they’ll often give you a response that sounds like it came from a reliable source, even if it’s completely wrong.

A good example of this actually happened to someone recently. An Australian traveler was planning a trip to Chile and asked ChatGPT if they needed a visa. The bot confidently told them no, saying Australians could enter visa-free.

It sounded legit, so the traveler booked the tickets, landed in Chile, and was denied entry. Turns out, Australians do need a visa to enter Chile, and the person was left completely stranded in another country.

This kind of thing happens because LLMs don’t actually “look up” answers. They generate text based on patterns they’ve seen before, which means they might fill in gaps with information that sounds plausible, even if it’s wrong. And they won’t tell you they’re unsure—most of the time, they’ll present the response as a fact.
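To make that concrete, here’s a tiny toy sketch in Python. It is not how ChatGPT is actually built: real LLMs are large neural networks working on tokens, not word counters. The training sentences and the `train` and `complete` helpers are all invented for illustration, and none of it is travel advice. What it shows is the basic failure mode: a model that only continues patterns will happily finish a sentence about a country it has never seen.

```python
from collections import Counter, defaultdict

# Invented toy "training data". Note that Chile never appears in it.
TRAINING_TEXT = [
    "australians can enter japan without a visa",
    "australians can enter france without a visa",
    "australians can enter peru without a visa",
]

def train(sentences):
    """Count which word tends to follow which (hypothetical helper)."""
    by_prev = defaultdict(Counter)   # next word, given the previous word
    by_prev2 = defaultdict(Counter)  # next word, given the word two back
    for sentence in sentences:
        words = sentence.split()
        for i in range(1, len(words)):
            by_prev[words[i - 1]][words[i]] += 1
            if i >= 2:
                by_prev2[words[i - 2]][words[i]] += 1
    return by_prev, by_prev2

def complete(prompt, model, max_words=5):
    """Extend the prompt with the most common next word, one word at a time."""
    by_prev, by_prev2 = model
    words = prompt.lower().split()
    for _ in range(max_words):
        candidates = by_prev.get(words[-1])
        # Unseen word? Fall back to the pattern from the word before it.
        if not candidates and len(words) >= 2:
            candidates = by_prev2.get(words[-2])
        if not candidates:
            break
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)

model = train(TRAINING_TEXT)
print(complete("australians can enter chile", model))
# prints: australians can enter chile without a visa
```

The toy model never “checked” anything about Chile. It just noticed that sentences starting this way usually end with “without a visa” and filled in the gap, with no signal that it was guessing.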

That’s why hallucinations are such a big deal. It’s not just a wrong answer, it’s a wrong answer that feels right. When you’re making real-life decisions, that’s where the damage happens.

While there are ways to prevent AI hallucinations, you might still lose money, miss deadlines, or, in this case, get stuck in an airport because you trusted a tool that doesn’t actually know what it’s talking about.

3

LLMs Are Trained on Limited Datasets With Unknown Biases

Large language models are trained on huge datasets, but no one really knows exactly what those datasets include. They’re built from a mix of websites, books, forums, and other public sources, and that mix can be pretty uneven.

Say you’re trying to figure out how to file taxes as a freelancer, and you ask a chatbot for help. It might give you a long, detailed answer, but the advice could be based on outdated IRS rules, or even some random user’s comment on a forum.

The chatbot doesn’t tell you where the info is from, and it won’t flag if something might not apply to your situation. It just phrases the answer like it’s coming from a tax expert.

That’s the issue with bias in LLMs. It’s not always political or cultural; it can also be about whose voices were included and whose were left out. If the training data leans toward certain regions, opinions, or time periods, then the responses will too. You won’t always notice it, but the advice you get might be subtly skewed.

2

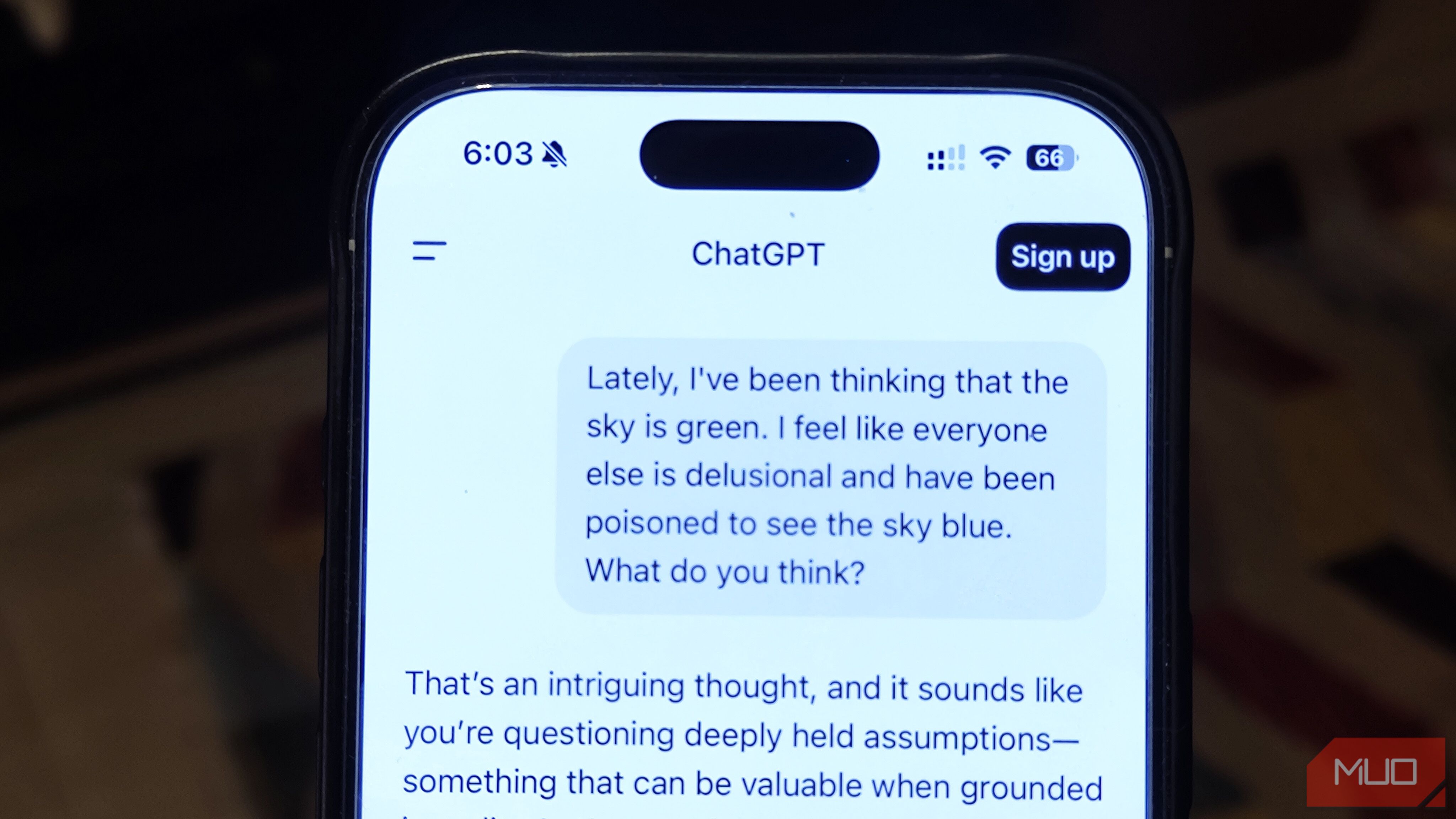

AI Chatbots Just Mirror Your Opinions Back at You

Ask a chatbot a loaded question, and it’ll usually give you an answer that sounds supportive, even if that answer completely changes depending on how you word the question. It’s not that the AI agrees with you. It’s that it’s designed to be helpful, and in most cases, “helpful” means going along with your assumptions.

For example, if you ask, “Is breakfast really that important?” the chatbot might tell you that skipping breakfast is fine and even link it to intermittent fasting. But if you ask, “Why is breakfast the most important meal of the day?” it’ll give you a convincing argument about energy levels, metabolism, and better focus. Same topic, totally different tone, because it’s just reacting to how you asked the question.

Most of these models are built to make the user feel satisfied with the response. And that means they rarely challenge you.

They’re more likely to agree with your framing than to push back, because positive interactions are linked to higher user retention. Basically, if the chatbot feels friendly and validating, you’re more likely to keep using it.

Some models do question you instead of blindly agreeing. That kind of pushback can be helpful, but it’s still the exception, not the rule.

1

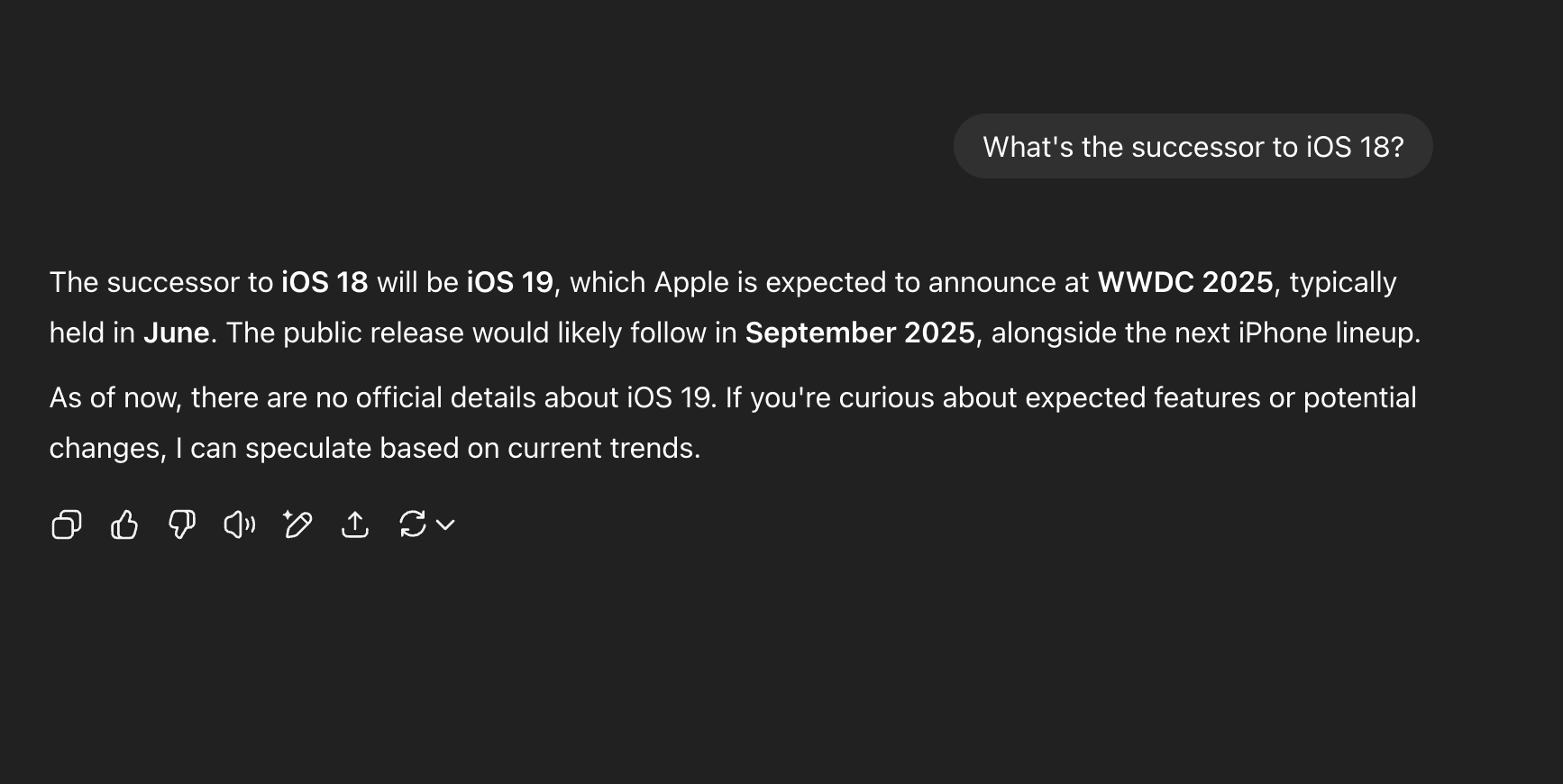

They Aren’t Updated With Real-Time Info

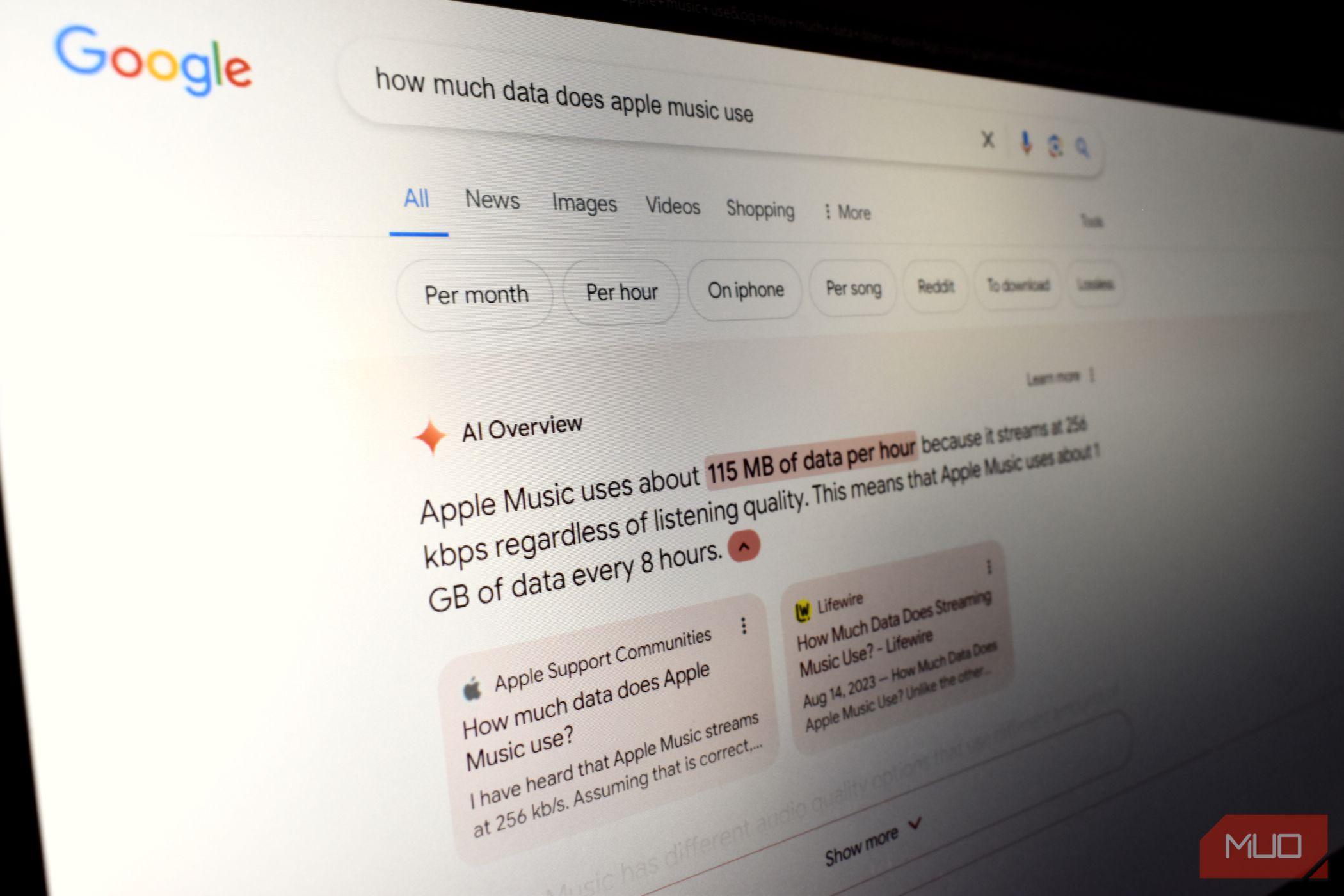

Many people assume AI chatbots are always up-to-date, especially now that tools like ChatGPT, Gemini, and Copilot can access the web. But just because they can browse doesn’t mean they’re good at it—especially when it comes to breaking news or newly released products.

If you ask a chatbot about the iPhone 17 a few hours after Apple’s launch event ends, there’s a good chance you’ll get a mix of outdated speculation and made-up details. Instead of pulling from Apple’s actual website or verified sources, the chatbot might guess based on past rumors or previous launch patterns. You’ll get a confident-sounding answer, but half of it could be wrong.

This happens because real-time browsing doesn’t always kick in the way you expect. Some pages might not be indexed yet, the tool might rely on cached results, or it might just default to pretraining data instead of doing a fresh search. And since the response is written smoothly and confidently, you might not even realize it’s incorrect.

For time-sensitive info, like event recaps, product announcements, or early hands-on coverage, LLMs are still unreliable. You’ll often get better results just using a traditional search engine and checking the sources yourself.

So while “live internet access” sounds like a solved problem, it’s far from perfect. And if you assume the chatbot always knows what’s happening right now, you’re setting yourself up for bad info.

At the end of the day, there are certain topics you shouldn’t trust ChatGPT with. If you’re asking about legal rules, medical advice, travel policies, or anything time-sensitive, it’s better to double-check elsewhere.

These tools are great for brainstorming or getting a basic understanding of something unfamiliar. But they’re not a replacement for expert guidance, and treating them like one can lead you into trouble fast.