The subtitle of the doom bible to be published by AI extinction prophets Eliezer Yudkowsky and Nate Soares later this month is “Why superhuman AI would kill us all.” But it really should be “Why superhuman AI WILL kill us all,” because even the coauthors don’t believe that the world will take the necessary measures to stop AI from eliminating all non-super humans. The book is beyond dark, reading like notes scrawled in a dimly lit prison cell the night before a dawn execution. When I meet these self-appointed Cassandras, I ask them outright if they believe that they personally will meet their ends through some machination of superintelligence. The answers come promptly: “yeah” and “yup.”

I’m not surprised, because I’ve read the book—the title, by the way, is If Anyone Builds It, Everyone Dies. Still, it’s a jolt to hear this. It’s one thing to, say, write about cancer statistics and quite another to talk about coming to terms with a fatal diagnosis. I ask them how they think the end will come for them. Yudkowsky at first dodges the answer. “I don’t spend a lot of time picturing my demise, because it doesn’t seem like a helpful mental notion for dealing with the problem,” he says. Under pressure he relents. “I would guess suddenly falling over dead,” he says. “If you want a more accessible version, something about the size of a mosquito or maybe a dust mite landed on the back of my neck, and that’s that.”

The technicalities of his imagined fatal blow delivered by an AI-powered dust mite are inexplicable, and Yudkowsky doesn’t think it’s worth the trouble to figure out how that would work. He probably couldn’t understand it anyway. Part of the book’s central argument is that superintelligence will come up with scientific stuff that we can’t comprehend any more than cave people could imagine microprocessors. Coauthor Soares also says he imagines the same thing will happen to him but adds that he, like Yudkowsky, doesn’t spend a lot of time dwelling on the particulars of his demise.

We Don’t Stand a Chance

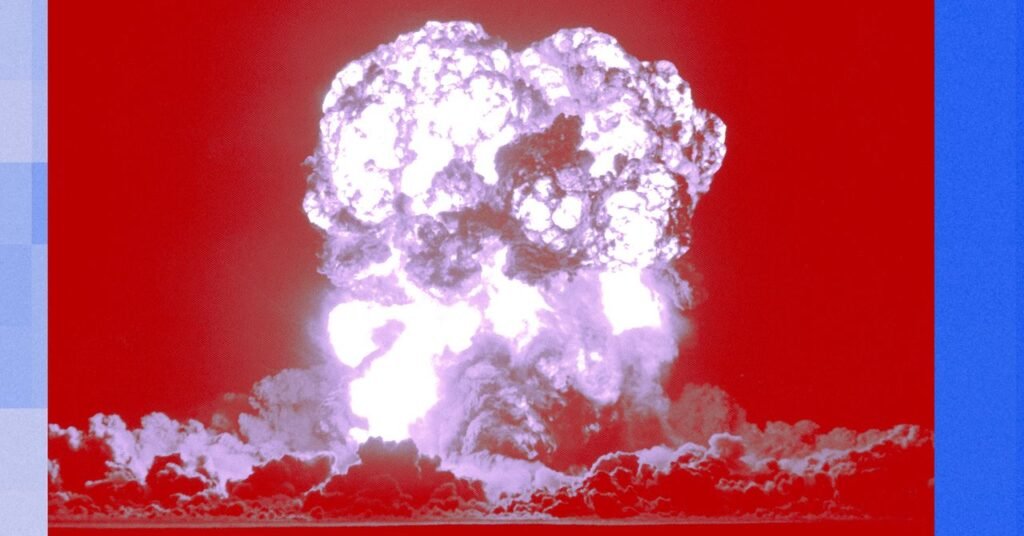

Reluctance to visualize the circumstances of their personal demise is an odd thing to hear from people who have just coauthored an entire book about everyone’s demise. For doomer-porn aficionados, If Anyone Builds It is appointment reading. After zipping through the book, I do understand the fuzziness of nailing down the method by which AI ends our lives and all human lives thereafter. The authors do speculate a bit. Boiling the oceans? Blocking out the sun? All guesses are probably wrong, because we’re locked into a 2025 mindset, and the AI will be thinking eons ahead.

Yudkowsky is AI’s most famous apostate, switching from researcher to grim reaper years ago. He’s even done a TED talk. After years of public debate, he and his coauthor have an answer for every counterargument launched against their dire prognostication. For starters, it might seem counterintuitive that our days are numbered by LLMs, which often stumble on simple arithmetic. Don’t be fooled, the authors say. “AIs won’t stay dumb forever,” they write. If you think that superintelligent AIs will respect boundaries humans draw, forget it, they say. Once models start teaching themselves to get smarter, AIs will develop “preferences” on their own that won’t align with what we humans want them to prefer. Eventually they won’t need us. They won’t be interested in us as conversation partners or even as pets. We’d be a nuisance, and they would set out to eliminate us.

The fight won’t be a fair one. They believe that at first AI might require human aid to build its own factories and labs, which it could easily get by stealing money and bribing people to help it out. Then it will build stuff we can’t understand, and that stuff will end us. “One way or another,” write these authors, “the world fades to black.”

The authors see the book as a kind of shock treatment to jar humanity out of its complacency and into adopting the drastic measures needed to stop this unimaginably bad conclusion. “I expect to die from this,” says Soares. “But the fight’s not over until you’re actually dead.” Too bad, then, that the solutions they propose to stop the devastation seem even more far-fetched than the idea that software will murder us all. It all boils down to this: Hit the brakes. Monitor data centers to make sure that they’re not nurturing superintelligence. Bomb those that aren’t following the rules. Stop publishing papers with ideas that accelerate the march to superintelligence. Would they have banned, I ask them, the 2017 paper on transformers that kicked off the generative AI movement? Oh yes, they would have, they respond. Instead of ChatGPT, they want Ciao-GPT. Good luck stopping this trillion-dollar industry.

Playing the Odds

Personally, I don’t see my own light snuffed by a bite on the neck from some super-advanced dust mote. Even after reading this book, I don’t think it’s likely that AI will kill us all. Yudkowsky has previously dabbled in Harry Potter fan fiction, and the fanciful extinction scenarios he spins are too weird for my puny human brain to accept. My guess is that even if superintelligence does want to get rid of us, it will stumble in enacting its genocidal plans. AI might be capable of whipping humans in a fight, but I’ll bet against it in a battle with Murphy’s law.

Still, the catastrophe theory doesn’t seem impossible, especially since no one has really set a ceiling for how smart AI can become. Also, studies show that advanced AI has picked up a lot of humanity’s nasty attributes; in one experiment, a model even contemplated blackmail to stave off retraining. It’s also disturbing that some researchers who spend their lives building and improving AI think there’s a nontrivial chance that the worst can happen. One survey indicated that almost half the AI scientists responding pegged the odds of a species wipeout at 10 percent or higher. If they believe that, it’s crazy that they go to work each day to make AGI happen.

My gut tells me the scenarios Yudkowsky and Soares spin are too bizarre to be true. But I can’t be sure they are wrong. Every author dreams of their book being an enduring classic. Not so much these two. If they are right, there will be no one around to read their book in the future. Just a lot of decomposing bodies that once felt a slight nip at the back of their necks, and the rest was silence.